Bryson Jones

Working on Robot Learning and Spatial Intelligence

About Me

Hello! I'm Bryson.

I'm a technologist interested in robot learning and spatial intelligence. I've built robots, rockets, racecars, and the software that designs and controls them over the past few years. These days I spend most of my time working on robot learning, world models, and dexterous manipulation.

Currently, I am the founder and CEO of Adjoint, a company building autonomous robots for skilled trade tasks to help address our nation's shortage of manufacturing labor.

Below are some of the projects I've been fortunate enough to work on, explore, and contribute to over the past handful of years. The common thread in all of my work is building intelligent systems for the physical world and pursuing the most inspiring projects I can.

If you're interested in chatting or working together, feel free to reach out to me at bkjones97@gmail.com.

Companies, Projects, Contributions, and Beyond

Adjoint

Today, we are on the edge of a cataclysmic skilled labor crisis driven by an aging workforce, offshored manufacturing, and low birth rates.

Without a change in course, we are in for a rude awakening, both socially and economically.

The only way through is to hyperscale labor productivity at a rate never seen before. "Knowledge work" jobs are starting to experience this with the onset of AI workflow automation, but skilled labor jobs have seen little productivity growth over the past three decades, and in many cases outright declines.

To solve this, we are leveraging recent breakthroughs in AI-driven dexterous manipulation, allowing robots to mimic tasks that historically have required technicians with decades of experience.

Our mission is to develop full-stack autonomous robotic systems that automate every class of skilled labor work, starting with composites fabrication (e.g., carbon fiber and fiberglass) as our first market.

Multitask Diffusion Transformer Policy

Open-Source Release

This method combines the highly scalable Diffusion Transformer (DiT) architecture with the highly expressive Diffusion Policy representation for robot manipulation. A similar method was demonstrated on the Boston Dynamics Atlas humanoid robot, but no code or model was open-sourced for it.

Given how performant the model appeared to be, I set out to implement it from scratch. The resulting model achieved a high level of performance on a variety of manipulation tasks with only 10-20 hours of training on a single H200.
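For readers new to Diffusion Policy, the sketch below shows roughly how such a policy produces actions at inference time: it starts from Gaussian noise and iteratively denoises a chunk of future actions, conditioned on an observation embedding. This is a minimal illustration assuming a standard DDPM sampler with a linear noise schedule; `denoiser` is a hypothetical placeholder standing in for the Diffusion Transformer, and none of these names come from the released model or the LeRobot API.

```python
import numpy as np


def make_schedule(num_steps: int, beta_start: float = 1e-4, beta_end: float = 0.02):
    """Linear DDPM noise schedule (an assumed choice) and the derived alpha terms."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    alphas = 1.0 - betas
    alpha_bars = np.cumprod(alphas)
    return betas, alphas, alpha_bars


def sample_action_chunk(denoiser, obs_embedding, horizon, action_dim, num_steps=100, seed=0):
    """DDPM reverse process over a (horizon, action_dim) action chunk.

    `denoiser(x_t, t, obs_embedding)` is a hypothetical noise-prediction network;
    in a DiT-based policy this role would be played by the transformer.
    """
    rng = np.random.default_rng(seed)
    betas, alphas, alpha_bars = make_schedule(num_steps)
    x = rng.standard_normal((horizon, action_dim))  # start from pure noise
    for t in reversed(range(num_steps)):
        eps_hat = denoiser(x, t, obs_embedding)  # predicted noise at step t
        # Mean of p(x_{t-1} | x_t) using the predicted noise
        mean = (x - betas[t] / np.sqrt(1.0 - alpha_bars[t]) * eps_hat) / np.sqrt(alphas[t])
        if t > 0:
            # Add fresh noise with variance beta_t (one standard DDPM choice)
            x = mean + np.sqrt(betas[t]) * rng.standard_normal(x.shape)
        else:
            x = mean  # final step is deterministic
    return x


def dummy_denoiser(x, t, obs_embedding):
    """Hypothetical placeholder that predicts zero noise; a trained model goes here."""
    return np.zeros_like(x)


actions = sample_action_chunk(dummy_denoiser, np.zeros(8), horizon=16, action_dim=7)
print(actions.shape)  # (16, 7)
```

Predicting a whole chunk of future actions at once, rather than a single action, is what lets a diffusion policy represent multimodal, temporally consistent behavior.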

I partnered with the HuggingFace team to release the model as an open-source policy integrated into the LeRobot project.

You can find the release and technical write-up here:

- Blog Post: Dissecting and Open-Sourcing Multitask Diffusion Transformer Policy (2025; draft in progress as of December 2025)

- GitHub Repo: here

- LeRobot Release: here

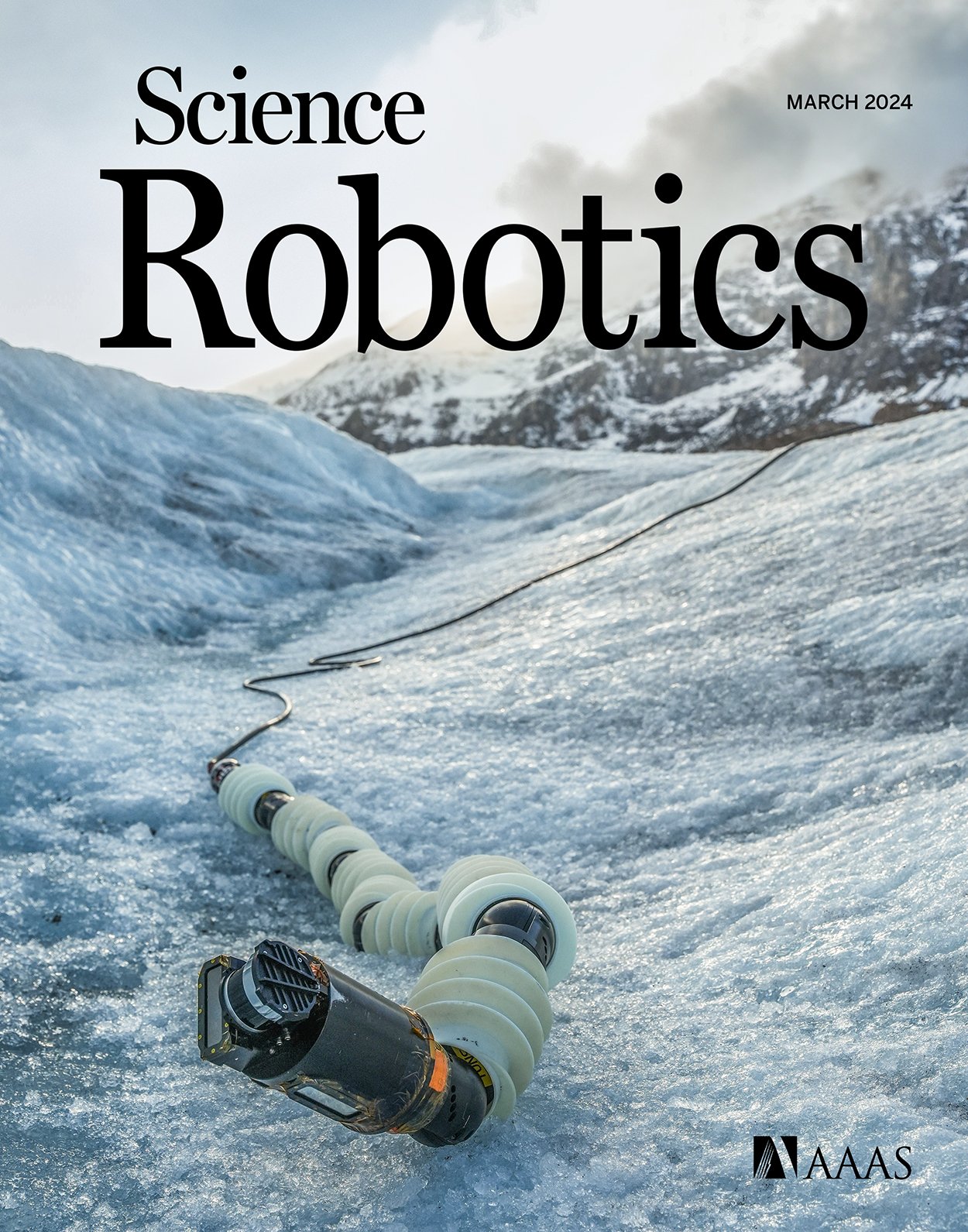

EELS Robot @ NASA JPL

The EELS project was a technology demonstration effort at JPL to develop a platform capable of operating autonomously for a mission on Enceladus, one of the icy moons of Saturn. The robot was designed to be a high-DOF snake robot so that it could adapt to the various contours of the icy terrain.

I led controls software and locomotion autonomy development to enable the robot to climb up and down icy crevasses (i.e., cracks) in the ice surface.

My work was a mix of full-stack robotics software engineering, optimal control, and reinforcement learning research to develop different capabilities for the robot.

The project culminated in a month-long field test campaign, where we deployed the robot by helicopter onto a glacier in Canada and demonstrated it navigating the ice surface and autonomously climbing down into icy crevasses. The work was featured on the cover of the March 2024 issue of Science Robotics.

You can find more information about the project here:

- NASA JPL Project Page: EELS Robot

- Journal Article: Science Robotics (March 2024)

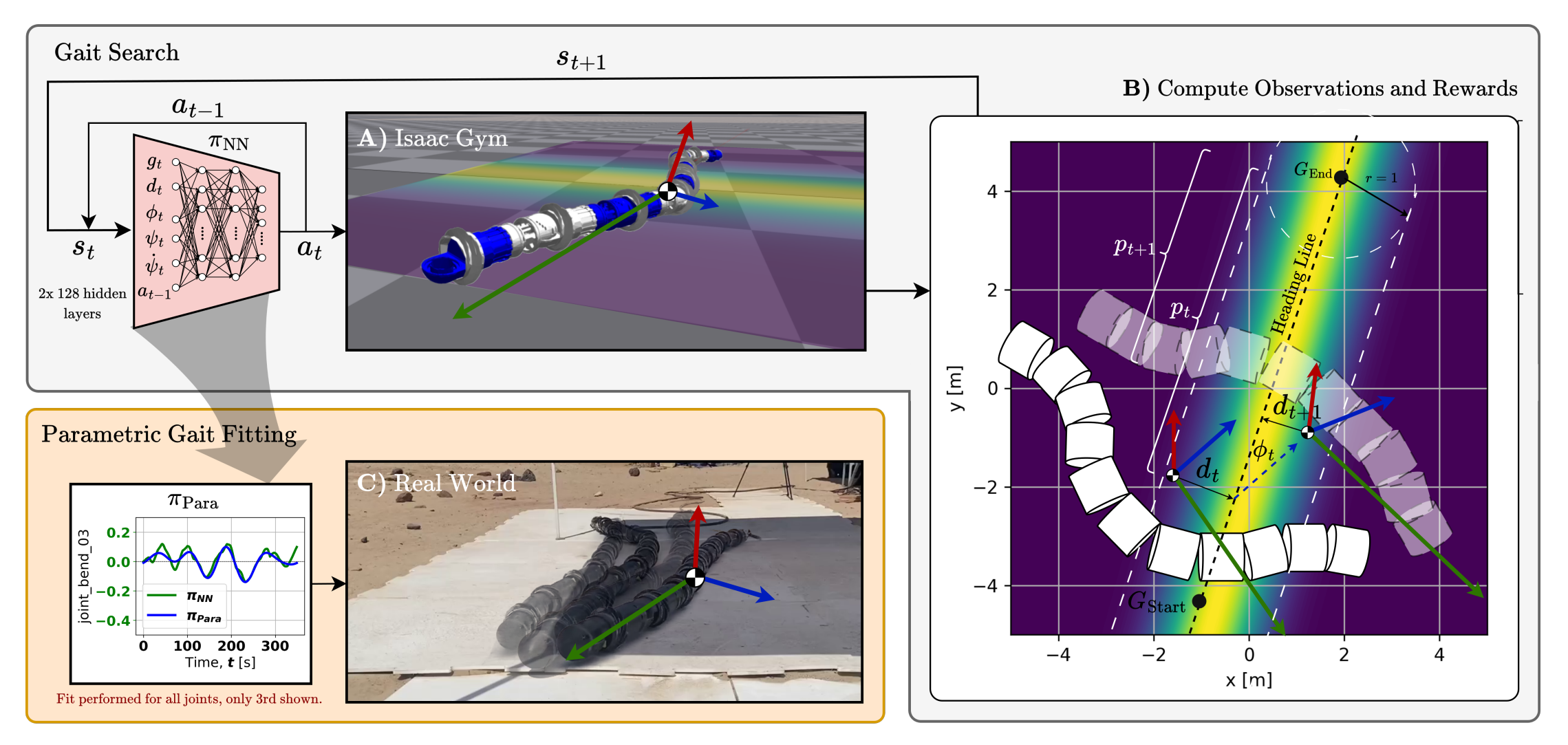

EELS RL

Reinforcement Learning for Snake Robot Locomotion

I built RL training pipelines for snake robot locomotion using NVIDIA Isaac Sim, enabling massively parallel policy rollouts and hyperparameter tuning.

The core challenge was searching a non-convex space for a sidewinding locomotion gait, a problem that is extremely difficult to solve with classical control methods because of its non-convexity and the robot's many degrees of freedom (up to 48 actuators).

One part of the work resulted in a publication at L4DC 2024: Reinforcement learning-driven parametric curve fitting for snake robot gait design.
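To give a sense of what searching for a "parametric" sidewinding gait means, the sketch below uses a simplified serpenoid-style parameterization common in the snake-robot literature: alternating joints follow phase-shifted sine waves traveling down the body. This is an illustrative assumption, not necessarily the parameterization used in the EELS work or the L4DC paper. `GaitParams`, `joint_angles`, and all default values are hypothetical.

```python
from dataclasses import dataclass

import numpy as np


@dataclass
class GaitParams:
    """Hypothetical sidewinding gait parameters (illustrative values only)."""
    amp_even: float = 0.6                # amplitude of even-indexed joints [rad]
    amp_odd: float = 0.3                 # amplitude of odd-indexed joints [rad]
    spatial_freq: float = 0.8            # phase change per joint index [rad/joint]
    temporal_freq: float = 2.0           # wave speed in time [rad/s]
    phase_offset: float = np.pi / 2      # offset between even and odd waves [rad]


def joint_angles(params: GaitParams, num_joints: int, t: float) -> np.ndarray:
    """Commanded joint angles at time t for a serpenoid-style sidewinding wave.

    Even and odd joints (assumed here to actuate in orthogonal planes) each
    follow a traveling sine wave; the phase offset between the two waves is
    what produces sidewinding rather than planar slithering.
    """
    n = np.arange(num_joints)
    phase = params.spatial_freq * n + params.temporal_freq * t
    even_wave = params.amp_even * np.sin(phase)
    odd_wave = params.amp_odd * np.sin(phase + params.phase_offset)
    return np.where(n % 2 == 0, even_wave, odd_wave)


angles = joint_angles(GaitParams(), num_joints=48, t=0.0)
print(angles.shape)  # (48,)
```

In a learning or optimization setup, an outer loop (for example, an RL agent or a sampling-based optimizer over many parallel simulated rollouts) would adjust the fields of `GaitParams` to maximize a locomotion reward. The mapping from these few parameters to forward progress on ice is what makes the search space non-convex.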

Relativity Space

Lead Robotics Software Engineer - R&D

At Relativity, I managed a team of robotics software engineers and led the technical direction for autonomy on our manufacturing robots.

My main technical contributions included designing and building the real-time control framework for device management on an RTOS, and implementing the company's first deep learning pipelines for defect detection. These pipelines analyzed X-ray images of 3D-printed launch vehicle structures to identify cracks and porosity.

Our team also researched graph neural network (GNN) models inspired by MeshGraphNets, which accelerated thermal-mechanical simulation runtimes by multiple orders of magnitude.

CMU - Robotics Institute - Master's Project

ADAPT: Autonomous Driving for Adverse Perceived Terrain

ADAPT was a full-stack robotic system built on a 1/4-scale electric vehicle equipped with stereo vision and LiDAR sensors and a custom-built onboard electronics board, all running our autonomy stack. The system could detect, segment, and navigate through adverse terrain conditions, specifically icy and wet patches.

I was the project lead, working with four other graduate students to architect, build, and test the system. My main focus was vehicle planning and controls, and I also contributed to the classical and deep learning-based perception stack.

The final system was an end-to-end self-driving stack that could navigate to given goal waypoints